This is a piece I originally wrote for a different blog, which has since shut down, so it won’t appear there. I hate to waste all that effort, though, so I’m posting it here. And yes, I previously posted the video produced by this process here, but this is the back story. Sorta.

My son is a huge fan of almost anything animated. A typical weekend morning finds him on the couch in a near-comatose state, staring blankly at Cartoon Network. It doesn’t matter which cartoon is on, just that one is. Were it not for the preternatural siren’s lure of frozen waffles beckoning him from the kitchen, he’d be there until deep into the afternoon.

It was only natural, then, to think of him when I started toying with the idea of animating still photographs. But maybe I’m getting ahead of myself. You see, the practical line between videos and still images is eroding. Almost any digital camera will capture stills or video, and almost any digital picture frame will display both. I’ve seen people shoot a short video clip rather than take several photos, and a camera in burst mode takes a rapid series of stills that, played in sequence, amount to video. Going the other way, it’s easy to get a still from a video by simply extracting a frame. But how might you take a still photograph and make it a video? That was the missing link. How could I take that lifeless, motionless lump on the couch and turn him into the cartoon he so admired?

I researched the topic for a while and stumbled across the idea of digital puppetry. Digital puppetry is the manipulation of digitally animated figures. It differs from traditional computer animation in that the characters are manipulated in real time rather than frame by frame. It is generally considered a form of machinima. Digital puppetry can be done using full 3D character models, but fortunately it can also be applied to 2D images. The result is a sort of “Harry Potter picture” effect: a photograph that moves somewhat like a short video clip. A version of this technique was used to create the E-Trade commercial with the talking baby that ran during the Super Bowl. But that animation applied digital puppetry to a video source, which required very high-end, expensive commercial tools and a lot of talented artists. Unfortunately, I lack that kind of budget and, frankly, that kind of artistic talent. However, some more digging turned up a much more reasonably priced tool that starts from a 2D image and seemed pretty easy to use: Reallusion’s CrazyTalk5. The system does its best work with cartoonish animations, but with a little care it works well with photographs of real people.

I ran the idea for the project by my son, and he thought the whole concept was pretty darn cool and readily volunteered his image and voice for the project. We were ready to begin.

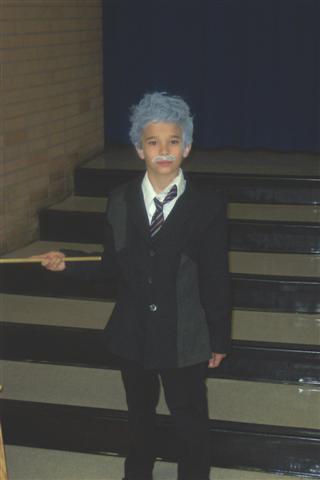

The process starts with selecting an ordinary digital photo. The photo needs a subject with an unobstructed, forward-facing face, but otherwise it can be anything. It doesn’t even need to be human. After searching my picture archive, we settled on a shot from his school’s Wax Museum exhibit a couple of years ago. The museum is an annual event where the kids dress up as historical figures and pose for photo-snapping parents. My nerdling opted to be Albert Einstein. Go figure.

Next I needed an audio clip. I wrote a short script based on a quote attributed to Einstein and got my son to perform it into a microphone. Clearly his participation in drama club was paying off.

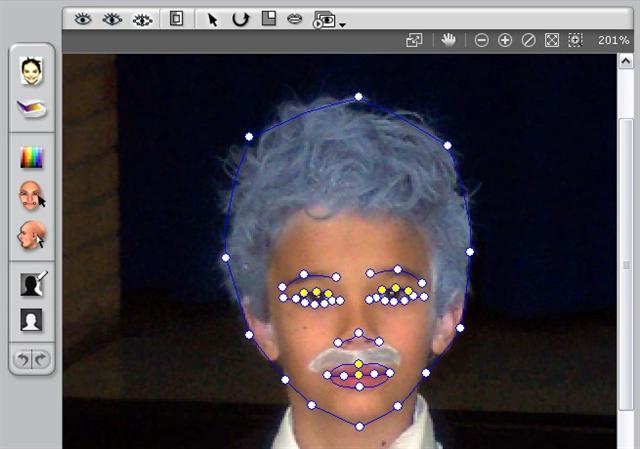

I now had the fodder ready to feed into the tool. Creating an animation started with cropping the photo, since I was only going to animate the head. Then I needed to build a detailed face map by marking the points of interest for animation. You can see that most of the points are on the eyes and mouth, since those are the most expressive features, but the eyebrows, chin, and nose also get marked. From all of this data, the tool automatically identifies areas such as the cheekbones and jawline for animation as well.

Next, the image is masked to separate the background from the character.

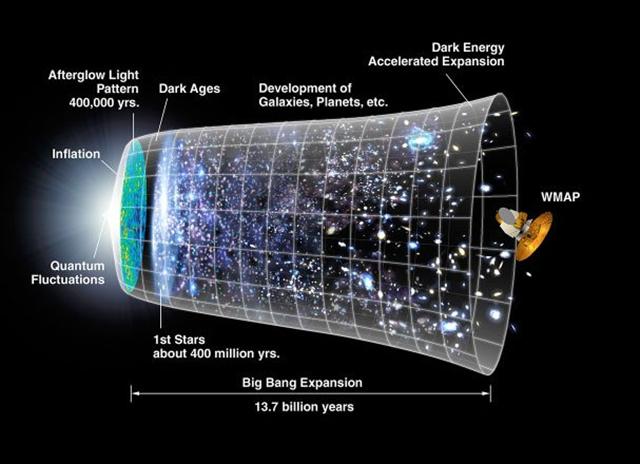

This is not always necessary, but I wanted to provide a more interesting setting than the darkened school stage in the original photo. So I selected an Einstein-ish space-time poster.

The masking also helps to more cleanly delineate the edges of the animation.
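
I don’t know how CrazyTalk implements masking internally, but the general idea behind swapping in a new background is ordinary alpha compositing: each output pixel is a blend of the character and the backdrop, weighted by the mask. Here is a minimal Python sketch of that idea, assuming images are equal-sized nested lists of RGB tuples and the mask holds values from 0.0 (background) to 1.0 (character); the function name `composite` is purely illustrative.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]
Mask = List[List[float]]  # 1.0 = keep character, 0.0 = show backdrop


def composite(fg: Image, bg: Image, mask: Mask) -> Image:
    """Blend foreground over background: out = a * fg + (1 - a) * bg per channel."""
    # Assumption: all three inputs share the same dimensions.
    if not (len(fg) == len(bg) == len(mask)):
        raise ValueError("foreground, background and mask must have the same height")
    out: Image = []
    for fg_row, bg_row, m_row in zip(fg, bg, mask):
        if not (len(fg_row) == len(bg_row) == len(m_row)):
            raise ValueError("rows must have the same width")
        row: List[Pixel] = []
        for f, b, a in zip(fg_row, bg_row, m_row):
            # Fractional mask values give soft edges around the character.
            row.append(tuple(round(a * fc + (1 - a) * bc) for fc, bc in zip(f, b)))
        out.append(row)
    return out


# One row of three pixels: fully masked in, half blended, fully masked out.
fg = [[(200, 100, 50)] * 3]
bg = [[(0, 0, 100)] * 3]
mask = [[1.0, 0.5, 0.0]]
print(composite(fg, bg, mask))  # [[(200, 100, 50), (100, 50, 75), (0, 0, 100)]]
```

The soft, in-between mask values along the edge are what let the character blend cleanly into the new backdrop instead of showing a hard cutout line.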

At this point, I had a usable animation model to work with. What remained was the puppetry part. Puppeteering means building a script, starting by marrying the audio file to the model. The tool provides automatic lip syncing based on the audio. It also provides puppeteering controls that let you add tracks for expression, eye movement, head movement, and camera moves. All of this is built up in successive layers. The process is somewhat like image editing in tools such as Photoshop, where layers are created independently and then merged to create the final product. The difference is that these layers have a time component in addition to the visual and audio components.
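
To make the idea of time-based layers a little more concrete, here is a rough Python sketch. It is not CrazyTalk’s actual file format or engine; it simply assumes each layer is a hypothetical named track of (time, value) keyframes, linearly interpolated, and that the pose at any moment is all the layers sampled together. The track names `mouth_open` and `head_turn` are made up for illustration.

```python
from bisect import bisect_right
from typing import Dict, List, Tuple

# A track is a list of (time in seconds, value) keyframes, sorted by time.
Keyframes = List[Tuple[float, float]]


def sample(track: Keyframes, t: float) -> float:
    """Linearly interpolate a track's value at time t, holding the end values."""
    times = [k[0] for k in track]
    i = bisect_right(times, t)
    if i == 0:
        return track[0][1]        # before the first keyframe
    if i == len(track):
        return track[-1][1]       # after the last keyframe
    (t0, v0), (t1, v1) = track[i - 1], track[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def pose_at(layers: Dict[str, Keyframes], t: float) -> Dict[str, float]:
    """Merge every layer into a single pose for one moment in time."""
    return {name: sample(track, t) for name, track in layers.items()}


# Hypothetical layers: a quick mouth movement and a slow head turn (degrees).
layers = {
    "mouth_open": [(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)],
    "head_turn": [(0.0, -10.0), (2.0, 10.0)],
}
print(pose_at(layers, 0.25))  # {'mouth_open': 0.5, 'head_turn': -7.5}
```

Because each track is independent, you can rework the head movement without touching the lip sync, which is the same appeal that layers have in image editing.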

The result can then be exported to a video file for viewing, for sharing, or for saving it ~~for future threats~~ to trot out later and create an embarrassing moment in front of the ~~victim’s~~ subject’s friends.

The process is capable of much more extreme expressions, but the results begin to drift from the photorealistic toward the cartoonish. That’s not always a bad thing. Given that the technique can animate your cat or the artwork on your fridge, it can definitely be a feature.

There are obvious opportunities to simplify and automate steps of this process, but even with the current tool set, the task is well within the reach of most digital photo enthusiasts. And it doesn’t take much imagination to see some really interesting applications for still photos. This is like JibJab on steroids. Wouldn’t history class have been more interesting if Tom Jefferson told you about it himself? Surely there’s something you’ve always imagined your spouse/child/mother/boss saying to you. Now you can arrange that! You can even post it on YouTube as evidence they did.

Personally, I’m thinking of putting a conversational AI engine behind an animation of myself so I can ~~haunt~~ proffer advice to my children long after my death. Kind of like Jor-El did for Superman, give or take the glowing crystals. It’s the least I can do, since I can’t figure out how to give them superpowers.